Outer product

- For "outer product" in geometric algebra, see exterior product.

In linear algebra, the outer product typically refers to the tensor product of two vectors. The result of applying the outer product to a pair of vectors is a matrix. The name contrasts with the inner product, which takes as input a pair of vectors and produces a scalar.

The outer product of vectors can also be regarded as a special case of the Kronecker product of matrices.

Some authors use the expression "outer product of tensors" as a synonym of "tensor product". The outer product is also a higher-order function in some computer programming languages such as APL and Mathematica.

Definition

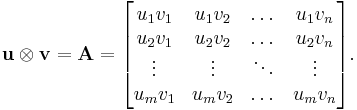

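Given a vector $\mathbf{u} = (u_1, u_2, \dots, u_m)$ with m elements and a vector $\mathbf{v} = (v_1, v_2, \dots, v_n)$ with n elements, their outer product $\mathbf{u} \otimes \mathbf{v}$ is defined as the $m \times n$ matrix $\mathbf{A}$ obtained by multiplying each element of $\mathbf{u}$ by each element of $\mathbf{v}$:

$$\mathbf{u} \otimes \mathbf{v} = \mathbf{A} = \begin{bmatrix} u_1 v_1 & u_1 v_2 & \cdots & u_1 v_n \\ u_2 v_1 & u_2 v_2 & \cdots & u_2 v_n \\ \vdots & \vdots & \ddots & \vdots \\ u_m v_1 & u_m v_2 & \cdots & u_m v_n \end{bmatrix}$$

Note that, for real vectors, $\mathbf{A}\mathbf{v} = \mathbf{u}\,\lVert\mathbf{v}\rVert^2$.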

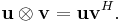

For complex vectors, it is customary to use the complex conjugate of $\mathbf{v}$ (denoted $\overline{\mathbf{v}}$). Namely, the matrix $\mathbf{A}$ is obtained by multiplying each element of $\mathbf{u}$ by the complex conjugate of each element of $\mathbf{v}$.
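The element-wise definition can be sketched in a few lines of plain Python. The function name `outer` and the `conjugate` flag are illustrative choices made here, not part of any library.

```python
def outer(u, v, conjugate=False):
    """Return the len(u) x len(v) matrix with entries u[i] * v[j].

    With conjugate=True, each element of v is complex-conjugated first,
    following the convention for complex vectors.
    """
    if conjugate:
        v = [complex(x).conjugate() for x in v]
    return [[ui * vj for vj in v] for ui in u]


# Illustrative values (assumed for this example).
A = outer([1, 2], [3, 4, 5])            # a 2 x 3 matrix
B = outer([1j], [1j], conjugate=True)   # 1j * conj(1j) equals 1
```

Each row of the result is the second vector scaled by one element of the first, which is why every outer product of nonzero vectors is a matrix of rank one.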

Definition (matrix multiplication)

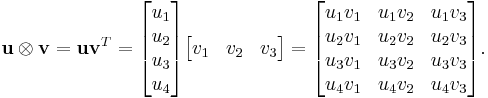

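The outer product $\mathbf{u} \otimes \mathbf{v}$ as defined above is equivalent to a matrix multiplication $\mathbf{u}\mathbf{v}^{\mathsf T}$, provided that $\mathbf{u}$ is represented as an $m \times 1$ column vector and $\mathbf{v}$ as an $n \times 1$ column vector (which makes $\mathbf{v}^{\mathsf T}$ a row vector). For instance, if $m = 4$ and $n = 3$, then

$$\mathbf{u} \otimes \mathbf{v} = \mathbf{u}\mathbf{v}^{\mathsf T} = \begin{bmatrix} u_1 \\ u_2 \\ u_3 \\ u_4 \end{bmatrix} \begin{bmatrix} v_1 & v_2 & v_3 \end{bmatrix} = \begin{bmatrix} u_1 v_1 & u_1 v_2 & u_1 v_3 \\ u_2 v_1 & u_2 v_2 & u_2 v_3 \\ u_3 v_1 & u_3 v_2 & u_3 v_3 \\ u_4 v_1 & u_4 v_2 & u_4 v_3 \end{bmatrix}$$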

For complex vectors, it is customary to use the conjugate transpose of $\mathbf{v}$ (denoted $\mathbf{v}^{\mathsf H}$):

$$\mathbf{u} \otimes \mathbf{v} = \mathbf{u}\mathbf{v}^{\mathsf H}$$
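As a small numerical illustration of the column-times-row form (the values are chosen here for the example, with $m = 2$ and $n = 3$):

```latex
\mathbf{u} = \begin{bmatrix} 1 \\ 2 \end{bmatrix}, \qquad
\mathbf{v} = \begin{bmatrix} 3 \\ 4 \\ 5 \end{bmatrix}, \qquad
\mathbf{u}\mathbf{v}^{\mathsf T}
  = \begin{bmatrix} 1 \\ 2 \end{bmatrix}
    \begin{bmatrix} 3 & 4 & 5 \end{bmatrix}
  = \begin{bmatrix} 3 & 4 & 5 \\ 6 & 8 & 10 \end{bmatrix}
```

The second row is twice the first, reflecting the fact that every row is a multiple of the same row vector.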

Contrast with inner product

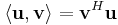

If m = n, then one can take the matrix product the other way, yielding a scalar (or $1 \times 1$ matrix):

$$\langle \mathbf{u}, \mathbf{v} \rangle = \mathbf{u}^{\mathsf T}\mathbf{v},$$

which is the standard inner product for Euclidean vector spaces, better known as the dot product. The inner product is the trace of the outer product.
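The relation between the trace of the outer product and the inner product can be checked numerically. The following is a minimal sketch in plain Python for real vectors; the helper names and the sample vectors are assumptions made for illustration.

```python
def outer(u, v):
    """Matrix with entries u[i] * v[j]."""
    return [[ui * vj for vj in v] for ui in u]


def dot(u, v):
    """Standard inner (dot) product of two equal-length vectors."""
    return sum(ui * vi for ui, vi in zip(u, v))


def trace(a):
    """Sum of the diagonal entries of a square matrix."""
    return sum(a[i][i] for i in range(len(a)))


u = [1, 2, 3]
v = [4, 5, 6]
lhs = trace(outer(u, v))  # diagonal entries are u[i] * v[i]
rhs = dot(u, v)           # 1*4 + 2*5 + 3*6 = 32
```

The diagonal of the outer product holds exactly the terms that the dot product sums, so the two values agree whenever m = n.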

Definition (abstract)

Let V and W be two vector spaces, and let W* be the dual space of W. Given a vector x ∈ V and a covector y* ∈ W*, the tensor product y* ⊗ x corresponds to the map A : W → V given by

$$w \mapsto y^*(w)\,x.$$

Here y*(w) denotes the value of the linear functional y* (which is an element of the dual space of W) when evaluated at the element w ∈ W. This scalar in turn is multiplied by x to give as the final result an element of the space V.
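The abstract construction can be mimicked in code by treating the covector as a function that returns a scalar. This is only a sketch: the name `tensor`, the choice W = R^3 and V = R^2, and the particular functional are all assumptions made for the example.

```python
def tensor(y_star, x):
    """Return the linear map A: W -> V defined by A(w) = y_star(w) * x."""
    def A(w):
        scalar = y_star(w)                 # evaluate the functional at w
        return [scalar * xi for xi in x]   # scale x by that scalar
    return A


# Hypothetical covector on W = R^3: w -> 3*w[0] + 4*w[1] + 5*w[2]
def y_star(w):
    return 3 * w[0] + 4 * w[1] + 5 * w[2]


x = [1, 2]                 # a vector in V = R^2
A = tensor(y_star, x)
image = A([1, 0, 0])       # y_star gives 3 here, so the image is 3 * x
```

Applying `A` to the three standard basis vectors of W yields the columns of the matrix with rows (3, 4, 5) and (6, 8, 10), which is the coordinate form of this outer product.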

Thus intrinsically, the outer product is defined for a vector and a covector; defining the outer product of two vectors requires converting one vector to a covector (in coordinates, taking the transpose). This can be done in the presence of a bilinear form, generally taken to be a nondegenerate form (meaning that the induced map from vectors to covectors is an isomorphism) or, more narrowly, an inner product.

If V and W are finite-dimensional, then the space of all linear transformations from W to V, denoted Hom(W,V), is generated by such outer products; in fact, the rank of a matrix is the minimal number of such outer products needed to express it as a sum (this is the tensor rank of a matrix). In this case Hom(W,V) is isomorphic to W* ⊗ V.
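To illustrate that outer products generate all matrices, the sketch below writes a matrix as a sum of outer products of its columns with standard basis vectors. The helper names and the sample matrix are assumptions for the example. This decomposition uses one term per column, which is an upper bound on the rank rather than the minimal number in general.

```python
def outer(u, v):
    """Matrix with entries u[i] * v[j]."""
    return [[ui * vj for vj in v] for ui in u]


def add(a, b):
    """Entry-wise sum of two matrices of equal shape."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


M = [[1, 2], [3, 4], [5, 6]]          # a 3 x 2 example matrix
n = len(M[0])

total = [[0] * n for _ in M]
for j in range(n):
    column = [row[j] for row in M]                 # j-th column of M
    e_j = [1 if k == j else 0 for k in range(n)]   # j-th standard basis vector
    total = add(total, outer(column, e_j))         # places column j in slot j
```

After the loop, `total` equals `M`. Here the two columns are linearly independent, so two outer products is also the minimum for this particular matrix.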

Contrast with inner product

If W = V, then one can also pair the covector w* ∈ V* with the vector v ∈ V via (w*, v) ↦ w*(v), which is the duality pairing between V and its dual, sometimes called the inner product.

Definition (tensor multiplication)

The outer product on tensors is typically referred to as the tensor product. Given a tensor a with rank q and dimensions $(i_1, \dots, i_q)$, and a tensor b with rank r and dimensions $(j_1, \dots, j_r)$, their outer product c has rank q + r and dimensions $(k_1, \dots, k_{q+r})$, which are the i dimensions followed by the j dimensions. For example, if A has rank 3 and dimensions (3, 5, 7) and B has rank 2 and dimensions (10, 100), their outer product C has rank 5 and dimensions (3, 5, 7, 10, 100). If A[2, 2, 4] = 11 and B[8, 88] = 13, then C[2, 2, 4, 8, 88] = 143.
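The index rule for tensors (concatenate the index tuples, multiply the entries) can be sketched as follows. Storing a tensor as a dictionary from index tuples to values is an assumption made to keep the example short; entries not listed are treated as zero.

```python
def tensor_outer(a, b):
    """Outer product of tensors stored as {index_tuple: value} dicts.

    The entry at index i + j (tuple concatenation) is a[i] * b[j].
    """
    return {i + j: x * y for i, x in a.items() for j, y in b.items()}


A = {(2, 2, 4): 11}   # one entry of a rank-3 tensor
B = {(8, 88): 13}     # one entry of a rank-2 tensor
C = tensor_outer(A, B)

rank_of_C = len(next(iter(C)))   # length of an index tuple: 3 + 2
```

Here `C` contains the single entry at index (2, 2, 4, 8, 88) with value 143, matching the example in the text.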

To understand the matrix definition of outer product in terms of the definition of tensor product:

- The vector u can be interpreted as a rank 1 tensor with dimension (m), and the vector v as a rank 1 tensor with dimension (n). The result is a rank 2 tensor with dimension (m, n).

- The rank of the result of an inner product between two tensors of rank q and r is the greater of q+r-2 and 0. Thus, the inner product of two matrices has the same rank as the outer product (or tensor product) of two vectors.

- It is possible to add arbitrarily many leading or trailing 1 dimensions to a tensor without fundamentally altering its structure. These 1 dimensions would alter the character of operations on these tensors, so any resulting equivalences should be expressed explicitly.

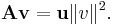

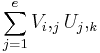

- The inner product of two matrices V with dimensions (d, e) and U with dimensions (e, f) is $\sum_{j=1}^{e} V_{ij} U_{jk}$, where $i = 1, \dots, d$ and $k = 1, \dots, f$. For the case where e = 1, the summation is trivial (involving only a single term).

The term "rank" is used here in its tensor sense, and should not be interpreted as matrix rank.
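The e = 1 case mentioned in the list above can be made concrete with a plain matrix product. The function `matmul` is a minimal sketch written for this example, and the input values are assumed.

```python
def matmul(V, U):
    """Inner product of matrices: result[i][k] = sum over j of V[i][j] * U[j][k]."""
    d, e, f = len(V), len(U), len(U[0])
    if any(len(row) != e for row in V):
        raise ValueError("inner dimensions must match")
    return [[sum(V[i][j] * U[j][k] for j in range(e)) for k in range(f)]
            for i in range(d)]


col = [[1], [2]]       # dimensions (2, 1): a column vector
row = [[3, 4, 5]]      # dimensions (1, 3): a row vector
P = matmul(col, row)   # dimensions (2, 3); each sum has a single term
```

With e = 1 every entry of `P` is a single product `col[i][0] * row[0][k]`, so the matrix product coincides with the outer product of the two vectors.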

Applications

The outer product is useful in computing physical quantities (e.g., the tensor of inertia), and performing transform operations in digital signal processing and digital image processing. It is also useful in statistical analysis for computing the covariance and auto-covariance matrices for two random variables.
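As one illustration of the statistical use, a sample covariance matrix can be accumulated as a sum of outer products of centered observations. This is a sketch under stated assumptions: the function names are hypothetical, the data are invented, and the division by N − 1 (the unbiased estimator) is a choice made for this example.

```python
def outer(u, v):
    """Matrix with entries u[i] * v[j]."""
    return [[ui * vj for vj in v] for ui in u]


def mean(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def cross_covariance(xs, ys):
    """Sample cross-covariance of paired observations xs[k], ys[k]."""
    n = len(xs)
    mx, my = mean(xs), mean(ys)
    p, q = len(mx), len(my)
    total = [[0.0] * q for _ in range(p)]
    for x, y in zip(xs, ys):
        dx = [a - b for a, b in zip(x, mx)]   # centered observation of x
        dy = [a - b for a, b in zip(y, my)]   # centered observation of y
        term = outer(dx, dy)
        for i in range(p):
            for j in range(q):
                total[i][j] += term[i][j]
    return [[t / (n - 1) for t in row] for row in total]


xs = [[1.0, 2.0], [3.0, 6.0], [5.0, 10.0]]   # three observations in R^2
cov = cross_covariance(xs, xs)               # auto-covariance of xs
```

Passing the same data for both arguments gives the auto-covariance matrix; passing two different paired data sets gives their cross-covariance.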

See also

Products

Duality

- Complex conjugate

- Conjugate transpose

- Transpose

- Bra-ket notation for outer product
